【Release】 Entering the Era of In-House Generative AI: Introducing the Fully On-Premise InfiniCloud AI Appliance

Announcing the InfiniCloud AI Appliance: A Fully On-Premise Solution for the New Era of In-House, Owned-and-Developed Generative AI

InfiniCloud has launched the InfiniCloud AI Appliance, available from August 2025. It is a generative AI product line designed to be fully owned, developed, and operated within your organization.

The InfiniCloud AI Series is built on three core principles:

Zero Cloud Dependency

Give your company full control with a self-contained AI solution that runs entirely on your infrastructure and belongs only to you.

Custom AI Designed for Your Business

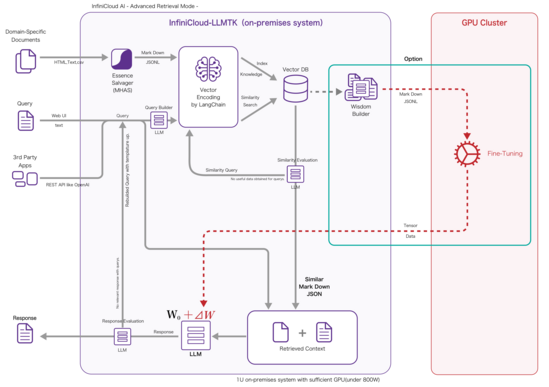

Create AI models tailored to your specific industry and operations, using techniques such as Retrieval-Augmented Generation (RAG) and model fine-tuning.

Simple to Start, Ready to Grow

Easy to implement from the first day, with the flexibility to expand as your needs evolve over time.

In Japan, Large Language Models (LLMs) are increasingly being adopted across a wide range of industries. However, most current implementations depend on cloud-based LLMs, which limits how far they can be customized for specific business domains.

While tools like Retrieval-Augmented Generation (RAG) can provide supplemental knowledge, they fall short when it comes to creating deeply specialized AI models. This has led to a global shift toward building and deploying language models of varying sizes, tailored for specific use cases.

Where InfiniCloud AI Stands Apart

InfiniCloud AI is designed from the ground up to be domain-specific. It moves beyond simply retrieving information and enables true specialization through fine-tuning. This allows businesses to embed not just knowledge, but domain-specific intelligence into their AI systems. The result is an AI that doesn't just know, but also understands, adapts, and acts with insight aligned to your business needs.

InfiniCloud specializes in infrastructure optimization, providing the on-premise cloud appliance InfiniCloud PCA and the virtualization platform InfiniCloud HV, both built on its proprietary High Response Private Cloud.

In addition to its infrastructure expertise, InfiniCloud has developed WIKIPLUS, a proprietary wiki-format CMS. Through this platform, the company has built the capability to generate high-quality training data for modern LLMs. This data uses a format similar to Markdown and focuses on structuring the semantics of web content, which helps AI capture and understand the meaning of documents.

By combining its strengths in infrastructure, virtualization, and data generation, InfiniCloud has created the InfiniCloud AI Appliance, a complete LLM platform that can be fully owned, operated, and developed within an organization.

Note: The WIKI format is a document style very similar to Markdown.

Effective Use Cases

- Secure Internal Knowledge Search: In-house AI that understands and searches manuals and business documents, answers natural language questions, reduces reliance on individuals, improves search efficiency, and lowers training costs.

- Automated Document Summarization and Internal Q&A: Summarizes meeting materials and reports, handles internal inquiries, and boosts information reuse and response speed.

- Collaborative Development with In-House Systems: With its OpenAI-compatible API, the appliance integrates easily with existing AI-enabled applications.

- Support for Multiple LLMs as Base Models: A range of preset language models is supported, including OpenAI GPT OSS, Alibaba Qwen, Swallow from Institute of Science Tokyo, and InfiniCloud’s fine-tuned models.

InfiniCloud AI Appliance: Leading the AI Series, Available from August 2025

The InfiniCloud AI Appliance is the first product in the InfiniCloud AI series. This fully on-premise 1U server fits into a standard data center rack and draws less than 800 watts. With its chat-style web interface, companies can fully own their AI without sending data outside the organization and can customize it to their specific business domain or industry.

Features | InfiniCloud AI Appliance

- Completely on-premise and self-contained within the company: no external communication required. Ideal for high-security environments such as finance and manufacturing.

- 1U chassis with an air-cooled design consuming less than 800 watts: suitable for installation in urban data centers or existing racks.

- Equipped with a chat-style web UI called Shiraito: a ready-to-use interface with full Japanese-language support.

- Includes an OpenAI-compatible API: integrates easily with your own SaaS and business systems.

- Comes with lightweight domain-specific large language models: includes redistributable models such as OpenAI GPT OSS, Alibaba Qwen, and Swallow from the School of Computing at Institute of Science Tokyo. Models can be optimized to your company’s knowledge and writing style.

- Supports GPU cluster integration for future expansion: allows training performance to scale as needs grow.
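
To illustrate what an OpenAI-compatible API means for integration, here is a minimal Python sketch of a client that speaks the OpenAI chat-completions wire format using only the standard library. The host name, base path, model name, and API key below are hypothetical placeholders; this announcement does not specify the appliance's actual endpoint or model identifiers, so treat this as a sketch under those assumptions rather than the product's documented interface.

```python
import json
import urllib.request

# Hypothetical values: the appliance's real host, path, and model names
# are not given in this announcement.
BASE_URL = "http://ai-appliance.example.internal/v1"
MODEL = "example-model"


def build_chat_request(prompt, base_url=BASE_URL, model=MODEL, api_key="placeholder"):
    """Build a POST request in the OpenAI chat-completions format."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def ask(prompt):
    """Send the request and return the text of the first reply."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Because the request shape matches the OpenAI format, an existing application that already targets that format would typically only need its base URL and model name changed to point at an on-premise endpoint.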

Roadmap | InfiniCloud AI Series Deployment

| Date | Product/Service | Details |
|---|---|---|
| August 2025 | InfiniCloud AIA Model S | InfiniCloud PCA-based on-premise AI appliance (starting at 4 million yen) |
| August 2025 | infinicloud-llmtk (OSS) | Open source release of a knowledge extraction engine for LLMs (compatible with LangChain) |
| October 2025 | InfiniCloud Private LLMaaS | Cloud generative AI service on dedicated instances (Dedicated LLM) |
| December 2025 | InfiniCloud AIA Model M | Higher-performance on-premise AI appliance |
| December 2025 | InfiniCloud AI FTaaS | Cloud-based service for fine-tuning AI |
| December 2025 | Shiraito (OSS) | Chat UI and integrated AI engine released as open source |
| Early 2026 | Shiraito Ver.2 | Upgrade to an integrated platform |
| Early 2026 | InfiniCloud AIAFT-Addon | Additional GPU module for advanced distributed training |

About Shiraito & infinicloud-llmtk (Upcoming Releases)

- Shiraito: A platform combining a web-based chat user interface with an AI engine. It will be released as open source software under the Apache license.

- infinicloud-llmtk: A lightweight module designed to extract and structure document knowledge. This toolkit supports Retrieval-Augmented Generation (RAG) with LangChain integration and facilitates data creation for fine-tuning. It will also be released as open source software under the Apache license.

- Updates & Expansions: Free software updates will be provided. Hardware expansion options will be available for an additional fee.
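
As a rough illustration of what "extracting and structuring document knowledge" can look like for a Markdown-like format, the sketch below splits a document into records keyed by their heading path, a common preparation step for RAG indexing or fine-tuning data. This is not the infinicloud-llmtk API, which is not described in this announcement; the function name `split_by_headings` and the record layout are assumptions made for illustration.

```python
import re

# Matches Markdown-style headings such as "# Title" or "## Section".
HEADING = re.compile(r"^(#{1,6})\s+(.*)$")


def split_by_headings(text):
    """Split Markdown-like text into records of heading path plus body text."""
    records = []
    path = []  # stack of heading titles leading to the current section
    body = []  # lines collected for the current section

    def flush():
        content = "\n".join(body).strip()
        if content:
            records.append({"path": " > ".join(path), "text": content})
        body.clear()

    for line in text.splitlines():
        match = HEADING.match(line)
        if match:
            flush()  # close the previous section before changing the path
            level = len(match.group(1))
            del path[level - 1:]  # drop headings at this level or deeper
            path.append(match.group(2).strip())
        else:
            body.append(line)
    flush()
    return records
```

Keeping the heading path with each chunk preserves some of the document's structure, so a retrieved passage still carries the context of the section it came from.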

Learn More About InfiniCloud AI

Secure your competitive edge with InfiniCloud AI